A closely linked network of several score brilliant men and a few women is pushing the boundaries of artificial intelligence research. You’ll meet many of these high-achieving and sometimes eccentric individuals in the pages of Genius Makers. You’ll get a glimpse inside Google, Facebook, Baidu, and other major institutions where most of the cutting-edge AI research is underway. And in these pages, you’ll gain perspective on the issues and uncertainties that trouble this rarefied community. More broadly, Genius Makers also shows how the shifting currents of peer pressure influence the course of scientific research.


One approach among many

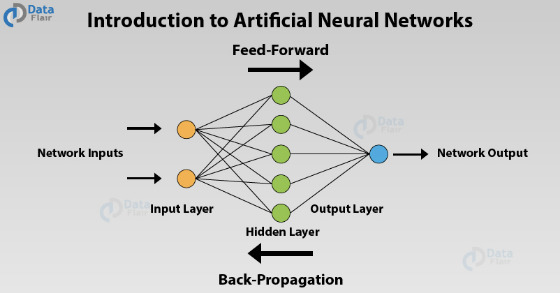

The principal theme in this important new book is the emergence of the promising approach to artificial intelligence that has become dominant in the field. Called deep learning, it’s grounded in artificial neural networks, which are loosely modeled on the human brain. In a neural network, scientists link together units or nodes called artificial neurons. As the network adjusts the strength of the connections among those neurons, it learns from experience in a way loosely analogous to learning in humans. Scientists “train” neural networks by exposing them to massive amounts of data. For example, to “teach” a neural network to recognize cats, they might feed it millions of images of cats. In the process, the network builds up a good enough statistical picture of cats that it can pick out a cat in a photo it has never seen before. It doesn’t “understand” cats, but it will recognize an image of one.
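To make the idea of “training” a little more concrete, here is a minimal sketch that does not come from the book: a single artificial neuron (a classic perceptron) that adjusts its connection weights each time it guesses wrong on a labeled example. The toy task, learning the logical AND of two inputs, is a hypothetical stand-in for cat photos; real deep learning stacks millions of such units in many layers and trains them with backpropagation on vast datasets.

```python
# A single artificial neuron (perceptron) that learns from labeled examples.
# Toy illustration only: real deep-learning systems use many layers of
# neurons trained with backpropagation on enormous datasets.

def predict(weights, bias, inputs):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=1.0):
    """Nudge the connection weights whenever the neuron guesses wrong."""
    n_inputs = len(examples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(weights, bias, inputs)
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical training data: the neuron should learn logical AND.
examples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(examples)
print([predict(weights, bias, x) for x, _ in examples])  # prints [0, 0, 0, 1]
```

Nothing in the loop “knows” what AND means; training simply keeps nudging numbers until the neuron’s answers match the labels, which is the same sense in which a network trained on cats recognizes them without understanding them.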

The emergence of deep learning

For decades, deep learning had few adherents in the sixty-year-old field of artificial intelligence. A competing approach called “symbolic AI” held sway. “Whereas neural networks learned tasks on their own by analyzing data, symbolic AI did not.” It relied instead on rules and logic that human engineers spelled out by hand. Only a handful of maverick scientists stubbornly persisted through the dark ages that began in the 1970s, until more powerful computers allowed their work on neural networks to live up to its promise. Then, suddenly, the barrier broke. The turning point was a peer-reviewed paper published in 2012, “one of the most influential papers in the history of computer science,” which has attracted more than 60,000 citations.

The high-profile events that have brought AI to the world’s attention in recent years are largely based on deep learning: the defeat of the world’s top Go champions, the increasing facility of machines in understanding spoken language, the advances made in self-driving cars, and the now-widespread use of face recognition. In Genius Makers, New York Times technology reporter Cade Metz profiles the scientists who made all this possible, for good or ill.

Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World by Cade Metz (2021) 382 pages ★★★★☆

Seven key players

In an appendix labeled “The Players,” Metz lists sixty-one of the characters whose names appear in the book. Seven of these—all men—play central roles in the drama, but one stands out above the others. I’ll start with him, then list the other six in alphabetical order by last name.

Geoff Hinton

British-Canadian cognitive psychologist and computer scientist Geoff Hinton (born 1947) is the grand old man of the researchers profiled in Genius Makers. Indeed, he is the central figure in this story, the founding father of the deep learning movement. As Metz puts it, “Hinton and his students changed the way machines saw the world.” It was he who stubbornly continued to advocate for the use of neural networks in developing artificial intelligence in the face of near-universal disapproval within the field. The source of that disapproval was Perceptrons, a 1969 book by MIT legends Marvin Minsky and Seymour Papert, which savagely attacked AI research using that approach and turned the tide against it for decades.

In Metz’s words, Hinton is “the man who didn’t sit down.” A back injury had prevented him from sitting for seven years when Metz arrived to interview him in December 2012. And Metz describes the elaborate arrangements Hinton must make when he travels. It’s quite remarkable that the man could function at all.

Hinton teaches at the University of Toronto. He joined Google in 2013 but lives and continues to work with his students in Canada. Hinton received the 2018 Turing Award, together with Yoshua Bengio and Yann LeCun, for their work on deep learning. He is the great-great-grandson of logician George Boole (1815-64). Boole’s work in mathematics (Boolean algebra) much later helped ground the new field of computer science.

Yoshua Bengio

French-born Yoshua Bengio (born 1964) is a computer science professor at the Université de Montréal. Along with Geoff Hinton and Yann LeCun, Bengio advanced the technology of artificial neural networks and deep learning in the 1990s and 2000s, when the world’s AI community had turned its back on the technique.

Demis Hassabis

Demis Hassabis (born 1976) is, in Metz’s words, a “British chess prodigy, game designer, and neuroscientist who founded DeepMind, a London AI start-up that would grow into the world’s most celebrated AI lab.” Google acquired DeepMind in 2014. Hassabis and his team there developed the extraordinary AI named AlphaGo. In 2016, AlphaGo beat Lee Sedol, one of the world’s top players of Go, which many consider the most difficult board game. But DeepMind’s work wasn’t limited to games. In 2020, its AlphaFold system made significant advances on the problem of protein folding, extending the reach of AI research into biology and medicine.

Alex Krizhevsky

Alex Krizhevsky, born in Ukraine and raised in Canada, was a brilliant young protégé of Geoff Hinton at the University of Toronto. He played a leading role in applying deep learning to computer vision, which is now central to face recognition and numerous other applications. Krizhevsky was one of Hinton’s partners in a startup they sold to Google in 2013. Together with Geoff Hinton, he “showed that a neural network could recognize common objects with an accuracy beyond any other technology.” Krizhevsky joined Google Brain and the Google self-driving car project but left the company in 2017. His work is widely cited by computer scientists.

Yann LeCun

Yann LeCun was born in France in 1960 but for decades has worked in the United States, first at Bell Labs and then at New York University, where he holds an endowed chair in mathematical sciences. Besides teaching in New York, he oversees Facebook’s Artificial Intelligence Research Lab. Like others portrayed in these pages, LeCun long collaborated with Geoff Hinton on deep learning before signing up with Facebook.

Andrew Ng

Andrew Ng, born in Britain in 1976, is an adjunct professor at Stanford University with a long, colorful history in machine learning and AI. He co-founded Google’s deep learning research team, Google Brain; managed Baidu’s Silicon Valley lab as the Chinese company’s chief scientist; and co-founded the pioneering MOOC (massive open online course) company Coursera, through which he taught more than 2.5 million students online. And since 2018 he has run a venture capital fund that backs startups in artificial intelligence.

Ilya Sutskever

Canadian computer scientist Ilya Sutskever, another of Geoff Hinton’s brilliant young protégés, followed him to Google Brain when they sold their startup company to the Silicon Valley giant. But he left Google to co-found OpenAI, an AI lab in San Francisco backed by Elon Musk to compete with Google’s London-based DeepMind. Sutskever has made important contributions to the field of deep learning, among them work that fed into AlphaGo; he is a co-author of the 2016 paper describing it.

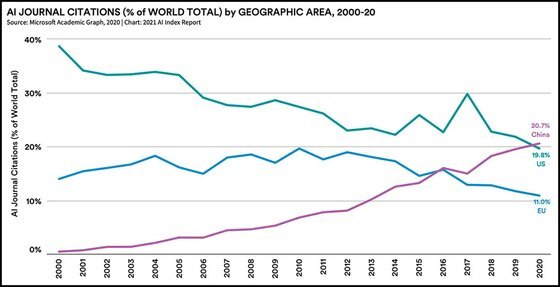

Where is the most advanced research now?

About the author

Cade Metz covers AI research, driverless cars, robotics, virtual reality, and other emerging technologies for the New York Times. He previously worked for Wired magazine. Genius Makers is his first book. James Fallows recently reviewed it in the paper’s Sunday Book Review, together with another book on AI by a second Times reporter. Fallows explains, “Much of Metz’s story runs from excitement for neural networks in the early 1960s, to an ‘A.I. winter’ in the 1970s, when that era’s computers proved too limited to do the job, to a recent revival of a neural-network approach toward ‘deep learning,’ which is essentially the result of the faster and more complex self-correction of today’s enormously capable machines.”

In the notes at the conclusion of the text, Metz describes the research he conducted in writing Genius Makers. “This book is based,” he writes, “on interviews with more than four hundred people over the course of the eight years I’ve been reporting on artificial intelligence for Wired magazine and then the New York Times, as well as more than a hundred interviews conducted specifically for the book. Most people have been interviewed more than once, some several times or more.”

For related reading

Check out 30 good books about artificial intelligence. I especially recommend The Big Nine: How the Tech Titans and Their Thinking Machines Could Warp Humanity by Amy Webb (an artificial intelligence skeptic paints a chilling picture of a future dominated by AI).

You might also enjoy:

- 5 best books about Silicon Valley

- 10 best books about innovation

- Science explained in 10 excellent popular books

- Good nonfiction books about the future (plus lots of science fiction)

- My 10 favorite books about business history
